- INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA INSTALL

- INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA UPDATE

- INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA FULL

- INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA CODE

- INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA TRIAL

INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA INSTALL

Note: We no longer release pre-built tflite-runtime wheels for Windows and macOS. For these platforms, you should use the full TensorFlow package or build the tflite-runtime package from source.

Instead of importing Interpreter from the tensorflow module, you now need to import it from tflite_runtime. For example, after you install the package above, copy and run the example file. It will (probably) fail because you don't have the tensorflow library installed. To fix it, change the import so that Interpreter comes from tflite_runtime.
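If you have several scripts to port, you can script the import change. The sketch below is minimal and assumes the script uses the common pattern `import tensorflow as tf` plus `tf.lite.Interpreter(...)`. The function name `use_tflite_runtime` is hypothetical, and any other way of importing TensorFlow is left untouched.

```shell
#!/bin/sh
# Hypothetical helper: rewrite a Python script so it loads the TensorFlow Lite
# Interpreter from tflite_runtime instead of the full tensorflow package.
# Assumes the script uses "import tensorflow as tf" and "tf.lite.Interpreter(".
use_tflite_runtime() {
  script="$1"
  # -i.bak edits the file in place and keeps the original as <script>.bak
  sed -i.bak \
    -e 's/^import tensorflow as tf$/import tflite_runtime.interpreter as tflite/' \
    -e 's/tf\.lite\.Interpreter(/tflite.Interpreter(/g' \
    "$script"
}
```

For example, `use_tflite_runtime my_script.py` (a placeholder name) rewrites the file and keeps the untouched copy as `my_script.py.bak`.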

INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA UPDATE

The tflite-runtime Python wheels are pre-built and provided for a set of platforms that includes Raspberry Pi 2, 3, 4 and Zero 2 running Raspberry Pi OS. If you want to run TensorFlow Lite models on other platforms, you should either use the full TensorFlow package or build the tflite-runtime package from source. If you're using TensorFlow with the Coral Edge TPU, you should instead follow the appropriate Coral setup documentation.

Note: We no longer update the Debian package python3-tflite-runtime. The latest Debian package is for TF version 2.5, which you can install by following the older installation instructions.

INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA FULL

This small package is ideal when all you want to do is execute .tflite models and avoid wasting disk space with the large TensorFlow library.

Note: If you need access to other Python APIs, such as the TensorFlow Lite Converter, you must install the full TensorFlow package. For example, the Select TF ops are not included in the tflite-runtime package. If your models have any dependencies on the Select TF ops, you need to use the full TensorFlow package instead.
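The choice described in this note comes down to one rule: if you need anything beyond the Interpreter (the Converter or Select TF ops), install the full package. Below is a sketch of that rule as a shell helper. The function name `tflite_pip_package` and its yes/no arguments are hypothetical. `tflite-runtime` and `tensorflow` are the pip package names of the two options.

```shell
#!/bin/sh
# Hypothetical helper: print the pip package to install, following the rule above.
#   $1 = "yes" if you need other Python APIs such as the TensorFlow Lite Converter
#   $2 = "yes" if your models depend on Select TF ops
tflite_pip_package() {
  needs_converter="$1"
  needs_select_tf_ops="$2"
  if [ "$needs_converter" = "yes" ] || [ "$needs_select_tf_ops" = "yes" ]; then
    echo "tensorflow"      # full package: Converter and Select TF ops included
  else
    echo "tflite-runtime"  # interpreter only, much smaller
  fi
}
```

With this helper, `python3 -m pip install "$(tflite_pip_package no no)"` would install tflite-runtime.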

INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA CODE

Using TensorFlow Lite with Python is great for embedded devices based on Linux. This page shows how you can start running TensorFlow Lite models with Python. All you need is a TensorFlow model converted to TensorFlow Lite. (If you don't have a model converted yet, you can experiment using the model provided with the example linked below.)

About the TensorFlow Lite runtime package

To quickly start executing TensorFlow Lite models with Python, you can install just the TensorFlow Lite interpreter, instead of all TensorFlow packages. We call this simplified Python package tflite_runtime. The tflite_runtime package is a fraction of the size of the full tensorflow package and includes the bare minimum code required to run inferences with TensorFlow Lite.

    # download and install the backend jar file (the scala code)

Some of the packages installed in environment geopyspark-env:

    # Name    Version    Build    Channel

To recreate this environment on another machine, we need to create the environment.yml file:

    # export active environment to a new file:

    # the new environment's name will be the one in environment.yml

Conda handles pip and conda packages, so there is one more thing to do: run geopyspark install-jar to get the Scala jar files for the Scala backend, and we're good to go.
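Only the comments survive for the export and recreate steps. What follows is a minimal sketch of the round trip, assuming the environment is called geopyspark-env as elsewhere in this post. On the original machine, `conda env export -n geopyspark-env > environment.yml` writes the file. The helpers below recreate the environment from it. The function names are hypothetical. Calling `geopyspark install-jar` through `conda run` (available in recent conda releases) is an assumption about how to run it without activating the environment first.

```shell
#!/bin/sh
# Print the environment name from the "name:" line of a file written by
# `conda env export`; `conda env create -f` creates an environment with this name.
env_name_from_yml() {
  sed -n 's/^name:[[:space:]]*//p' "$1" | head -n 1
}

# Recreate the environment from the file, then fetch the Scala backend jar
# again, since conda only restores conda and pip packages. Needs conda on PATH.
recreate_geopyspark_env() {
  yml="${1:-environment.yml}"
  conda env create -f "$yml" || return 1
  conda run -n "$(env_name_from_yml "$yml")" geopyspark install-jar
}
```

On the new machine, `recreate_geopyspark_env environment.yml` then performs both steps.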

    $ conda create -n geopyspark-env python=3.6 gdal

    # install Rasterio with conda-forge channel
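You can keep the install order from this post in one script: GDAL first, then Rasterio from conda-forge, then GeoPySpark and its backend jar. This is only a sketch. This copy of the post preserves only the `conda create` line, so the Rasterio, pip and `conda run` commands are assumptions. The `run` helper and its `DRY_RUN` switch are hypothetical additions that let you preview the commands before executing them.

```shell
#!/bin/sh
ENV_NAME=geopyspark-env

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$1"
  else
    sh -c "$1"
  fi
}

install_geopyspark_env() {
  # 1. New environment with Python 3.6 and GDAL (the command from the post)
  run "conda create -n $ENV_NAME python=3.6 gdal" || return 1
  # 2. Rasterio from the conda-forge channel (assumed command)
  run "conda install -n $ENV_NAME -c conda-forge rasterio" || return 1
  # 3. GeoPySpark with pip inside the environment (assumed command)
  run "conda run -n $ENV_NAME pip install geopyspark" || return 1
  # 4. Download and install the backend jar file (the Scala code)
  run "conda run -n $ENV_NAME geopyspark install-jar"
}
```

`DRY_RUN=1 install_geopyspark_env` only prints the four commands. Without `DRY_RUN=1`, they run one after another and stop at the first failure.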

INSTALL PYSPARK ON UBUNTU 18.04 WITH CONDA TRIAL

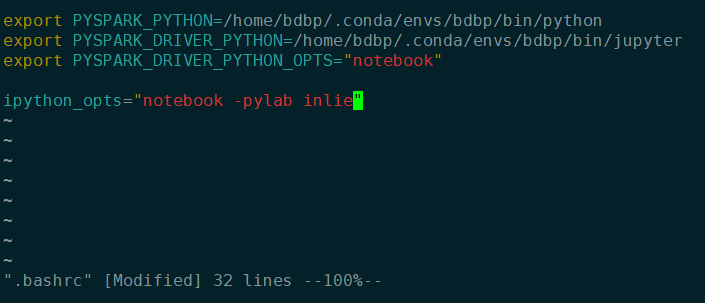

Run Python Spark Shell

    $ /usr/local/spark/bin/pyspark

You'll see the Spark banner, which starts with "Welcome to". Type Ctrl + d to quit.

Step #4 Install GeoPySpark (with conda)

To me it is better to install any Python package in a specific environment using Conda. After some trial and error I was able to get a working environment with GDAL, Rasterio, and GeoPySpark following this order: first create a new environment named geopyspark-env and install GDAL, then add Rasterio, then GeoPySpark.
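To confirm that the environment really works, you can try importing each library with the environment's Python. The sketch below assumes the GDAL bindings are importable as `osgeo.gdal` (the usual module name for GDAL's Python bindings) and the other two as `rasterio` and `geopyspark`. `check_imports` is a hypothetical helper, not part of any of these packages.

```shell
#!/bin/sh
# Hypothetical check: import each module with the given Python interpreter,
# print ok/FAILED per module, and return non-zero if any import failed.
check_imports() {
  py="$1"
  shift
  status=0
  for mod in "$@"; do
    if "$py" -c "import $mod" >/dev/null 2>&1; then
      echo "ok: $mod"
    else
      echo "FAILED: $mod"
      status=1
    fi
  done
  return "$status"
}
```

With the environment activated (`conda activate geopyspark-env`), run `check_imports python osgeo.gdal rasterio geopyspark`.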

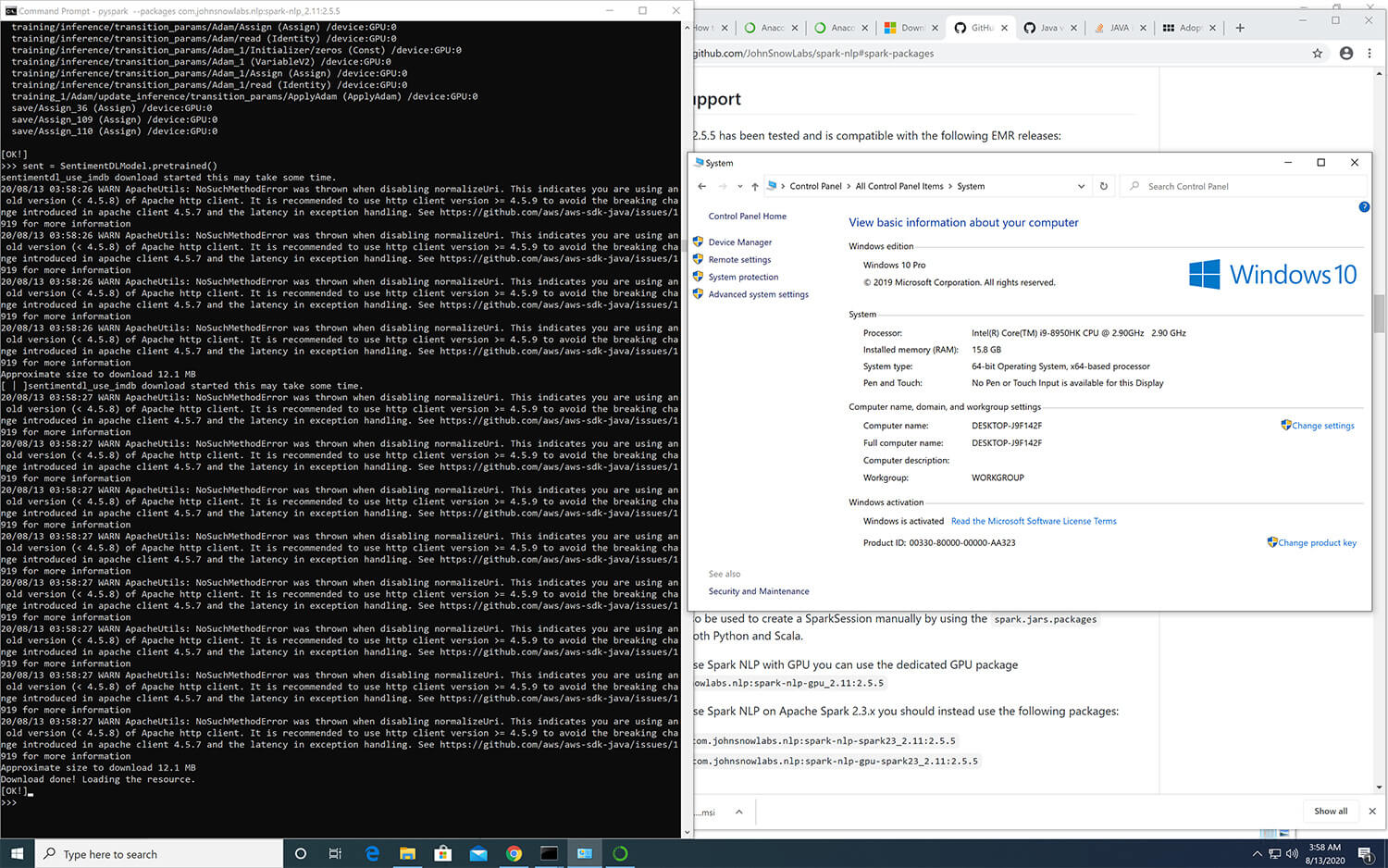

This post describes my experience installing GeoPySpark on Ubuntu 18.04.1 LTS.

GeoTrellis is a Scala library that uses Spark to work with large amounts of raster data in a distributed environment. It aims to provide raster processing at web speeds (sub-second or less).

GeoPySpark is a Python bindings library for GeoTrellis. It lets users work with a Python front end backed by Scala, and it can do many of the operations present in GeoTrellis. Much of the functionality of GeoPySpark is handled by another library, PySpark (hence the name GeoPySpark). PySpark itself is a Python binding of Spark, a processing engine available in multiple languages but whose core is written in Scala.

Requirements

Java 8 and Scala 2.11 are required by GeoTrellis. In addition, Spark needs to be installed and configured.

Step #1 Check Java

Check whether Java is installed:

    $ java -version
    OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

Check whether JAVA_HOME is set:

    $ echo $JAVA_HOME

If it is not, add these lines to the .bashrc file:

    # JAVA
    export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

Step #2 Install Scala

    $ sudo apt-get install scala

When you start the Scala REPL, its welcome message ends with "Or try :help."

Step #3 Install Spark

Download the Apache Spark file and extract the folder. The commands below assume it is in /usr/local/spark.

Add $SPARK_HOME as an environment variable for your shell by adding this line to the .bashrc file:

    # Set SPARK_HOME

Edit the Spark config file:

    $ cd /usr/local/spark/conf
    $ sudo cp spark-env.sh.template spark-env.sh
    $ sudo nano /usr/local/spark/conf/spark-env.sh

Add these lines:

    export SPARK_MASTER_IP=127.0.0.1
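Two small helpers can make the Java check and the .bashrc edits above repeatable. One reads the major version from `java -version` output (GeoTrellis needs Java 8). The other appends an export line only if it is not already in the file. This is a sketch and both function names are hypothetical. The SPARK_HOME value in the usage note assumes Spark was extracted to /usr/local/spark, as the config commands above suggest.

```shell
#!/bin/sh
# Hypothetical helper: print the Java major version from `java -version`
# output read on stdin. Java 8 reports "1.8.0_<update>", Java 9+ "<major>...".
java_major_version() {
  ver=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$ver" in
    1.*) ver=${ver#1.} ;;  # "1.8.0_181" -> "8.0_181"
  esac
  echo "${ver%%[._]*}"     # drop everything from the first "." or "_"
}

# Hypothetical helper: append a line to a file only if that exact line is not
# already present, so re-running the setup does not duplicate exports.
append_once() {
  line="$1"
  file="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For example, `java -version 2>&1 | java_major_version` should print 8 on the setup described here. You can run `append_once 'export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64' ~/.bashrc` and `append_once 'export SPARK_HOME=/usr/local/spark' ~/.bashrc` any number of times without duplicating lines.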
